This is Part II of my response to Santhosh Challenge. Part I is HERE.

8. If you are the solution architect for a retail website which has to be developed; what kind of questions would you ask with respect to “Scalability” purpose with respect to “Technology” being used for the website?

Here are a few questions on Scalability w.r.t Technology:

1. Can Technology take the current load of customers visiting the website?

2. Can technology handle an increasing load of customers per unit of time?

3. What is the maximum threshold load the technology can handle?

4. What is the maximum load under which the technology performs optimally, without any degradation in website usage?

5. Is the technology easily customizable with additional infrastructure?

6. Does technology have any scalability limitations per se?

7. Does technology blend itself with the programming languages used for coding the website?

8. Does technology blend well with the hardware, software and middleware used?

9. Is technology portable if there is a need to build the website on multiple platforms over a period of time?

A system is said to be scalable if it continues to accept a growing amount of load and operates normally without incurring additional configuration costs.

Let me assume I work for one of the biggest retail giants in the world, with retail business spread across multiple continents. Let’s understand what they need as part of basic infrastructure:

Hardware

Data Warehouses

Content Management Systems

Mainframe and Unix Servers for running batch jobs at regular intervals

Middleware for data transfer between multiple components

Marketing management tools

Email management tools

Data storage devices

Multi-processor distributed systems

Software

Server operating systems

Databases

Customer facing applications

Marketing applications

Marketing management tools

Email management tools

In a typical scenario, if a performance problem surfaces, what does the development team do? They increase the infrastructure to mitigate the problem. This is an easy way to temporarily shoo away the performance problem. Over a period of time, if the management decides to keep contribution margin of the product intact, adding additional infrastructure will become a problem.

What has scalability to do with Infrastructure when the question explicitly asks about technology scalability?

I strongly believe that technology and infrastructure must go hand in hand to be able to make any software solution scalable. If technology is scalable, but the infrastructure setup is pretty bad, there is a problem. If infrastructure is on par as expected, but technology isn’t scalable, that is a problem too.

Let’s consider scalability in a web service scenario. If we had to scale the web service usage to a large set of customers over a period of time, the technology must suitably allow it. If I said I’ll write a simple batch script for resource allocation in the above scenario, it may or may not be scalable. If the same solution were written using a framework built on a solid programming language, maybe there is more hope.

In short, scalability is good only when there is a right mix of technology and infrastructure. Both cannot be mutually exclusive to make any system scalable.

NOTE: I am not happy with my answer on this one. I liked the one written by Markus Gartner.

9. How do you think “Deactivate Account” should work functionally keeping in mind about “Usability” & “Security” quality criteria?

I have been unable to close one of my bank accounts as they have a lengthy de-activation process - an application form, returning the security device and remaining check leaves if any. Initially, I was annoyed as I didn’t want to spend time going to the bank and they were not taking my verbal confirmation on phone seriously. After a while, I realised that if de-activation was so simple, I could de-activate anyone’s account if I knew their customer number. It’s important to keep de-activation process as secure and fool-proof as possible.

If a user decides to de-activate his account on the website, it can be done using following steps:

Step 1: Identify the user

Identify if the user has a valid account by asking for username/email address and validating accordingly

Step 2: Authenticate the user

Authenticate the user by asking the user to reveal some information that is unique to that user. This way, we can be doubly sure that the right account is de-activated. Having a captcha in this step prevents bots from misusing this feature.

Step 3: De-activation process

Initiate de-activation if user provides valid information by sending an email with a de-activation link. Note that this hyperlink must be limited to one time use.

Step 4: Confirmation of de-activation process

User needs to click on deactivation link in the email to de-activate the account

The above steps are reasonably secure, and they are also usable because they are simple and easy to follow.
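Steps 3 and 4 above depend on the emailed link working exactly once. Here is a minimal sketch of that idea in Python, not a production design. The in-memory `_pending` store, the function names and the one-hour lifetime are all assumptions made for illustration; a real website would keep this state in its database and send the token inside the email hyperlink.

```python
import hashlib
import secrets
import time

TOKEN_TTL_SECONDS = 3600  # assumed lifetime of a de-activation link

# Hypothetical in-memory store: token hash -> (username, expiry timestamp)
_pending = {}


def _digest(token):
    """Hash the token so the store never holds a usable link."""
    return hashlib.sha256(token.encode("utf-8")).hexdigest()


def issue_deactivation_token(username, now=None):
    """Step 3: create a single-use token to embed in the emailed link."""
    now = time.time() if now is None else now
    token = secrets.token_urlsafe(32)
    _pending[_digest(token)] = (username, now + TOKEN_TTL_SECONDS)
    return token


def confirm_deactivation(token, now=None):
    """Step 4: return the username to de-activate, or None if the link is invalid."""
    now = time.time() if now is None else now
    # pop() removes the entry, so a second click on the same link fails.
    entry = _pending.pop(_digest(token), None)
    if entry is None:
        return None
    username, expires_at = entry
    if now > expires_at:
        return None
    return username
```

Clicking the link a second time, or after the assumed expiry, returns `None`, so the account cannot be de-activated by replaying an old email.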

10. For every registration, there is an e-mail sent with activation link. Once this activation link is used account is activated and a “Welcome E-mail” is sent to the end-users e-mail inbox. Now, list down the test ideas which could result in spamming if specific tests are not done.

1. Click on the activation hyperlink received in email inbox multiple times. If this action was tied to another action where an email is sent to the user ‘Welcoming the user’, then each time user clicked on this hyperlink, an email would be sent to the user

2. Once the activation hyperlink opens and confirms that activation has succeeded, refresh this page using “Refresh/Reload” option. If the refresh of this page was tied to an action where an email is sent to the user ‘Welcoming the user’, then user could get spammed

3. Once the activation hyperlink opens and confirms that activation has succeeded, refresh this page using ‘Reload Every’ add-on to spam the user. This is a variation of Step 2 above.

4. If the registration page does not check for already registered email addresses, registration can be done multiple times using same email address, hence spamming the user using this option. This step when combined with 1, 2 and 3 above can be used to spam the user to a large extent

5. In the registration page, enter same email address multiple times separated by commas. Any loophole in the application could consider these email addresses and send same activation hyperlink to same email address multiple times. Note that this scenario is not directly linked to the above question.
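The test ideas above all probe whether the application sends an email more often than it should. As a rough sketch of the behaviour those tests would hope to find, the hypothetical `Registry` class below sends the welcome email only on the first successful activation and rejects repeated or comma-separated email addresses. The class, its fields and the `outbox` list standing in for a mail server are assumptions for illustration only.

```python
class Registry:
    """Hypothetical account registry used only for illustration."""

    def __init__(self):
        self.accounts = {}  # normalized email -> {"token": str, "active": bool}
        self.outbox = []    # (email, kind) tuples standing in for sent mail

    def register(self, email, token):
        key = email.strip().lower()
        # Reject comma-separated lists and already registered addresses
        # (test ideas 4 and 5).
        if "," in key or key in self.accounts:
            return False
        self.accounts[key] = {"token": token, "active": False}
        self.outbox.append((key, "activation"))
        return True

    def activate(self, email, token):
        key = email.strip().lower()
        account = self.accounts.get(key)
        if account is None or account["token"] != token:
            return False
        # Only the first activation sends the welcome email, so repeated
        # clicks or page reloads (test ideas 1 to 3) cannot spam the user.
        if not account["active"]:
            account["active"] = True
            self.outbox.append((key, "welcome"))
        return True
```

With this shape, clicking the activation link three times still leaves exactly one welcome email in `outbox`.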

11. In what different ways can you use “Tamper Data” add-on from “Mozilla Firefox” web browser? If you have not used it till date then how about exploring it and using it; then you can share your experience here.

1. Viewing client requests - Tamper data can be used to view all requests sent from client to server

2. Parameter tampering - Can be used to tamper with input parameters before submitting them to server

3. Security Testing - Can be used to tamper with HTTP requests (methods, headers and parameters) to security test client requests to servers

4. Cookies/Session ids - Can be used to view and tamper with cookies / session ids and hijack other user sessions

Pros

1. Tamper Data is an add-on that is accessible wherever browsers are installed

2. It’s easy to set up and use if Firefox browser is installed

Cons

1. Tamper Data is not as powerful as Burp Suite :-)

12. Application is being launched in a month from now and management has decided not to test for “Usability” or there are no testers in the team who can perform it and it is a web application. What is your take on this?

Usability testing is treated like a stepchild in most organizations. Usability testing often becomes a last minute dump task which could be done if and only if so called "functional testing" is complete. We all know that exhaustive testing is impossible. As a result, there may be little or no time for usability testing.

If I am part of this project, I would identify a tester or a group of testers who I think have a decent knowledge of usability. I would help them learn about usability heuristics and become well-versed in these areas. Another good learning method would be to provide sample websites to test for usability, evaluate their reports and provide feedback. Based on this, I would hope they'll do a good job testing the web application above.

As I write this, I am aware that some people reading this will think, "Where is the time to do all of the above when there is hardly any time left to test for usability?” I completely empathize with such people. I have been there and done that. In such projects, it makes sense to provide "On the Job" training. It's important to identify at least one person who has good understanding of usability in general and usability testing in particular. **This person must set up a usability testing team and do one or more of the following:

Hallway Testing

Employ a few people walking down the hallway to test websites. Hand pick users from different walks of life and find out what irritates them as they use the websites.

Recorded Surveys

Record the proceedings as user uses the website and talks about pain points. Show the findings to web designers and work on how websites can be designed differently to ease those pain points.

Emphasis on Feelings

Magnifying user’s feelings (good and bad) as they use the websites helps gauge what makes good websites good and bad websites bad. Users’ feelings are fragile and it’s important for websites to handle these feelings with care.

**Adapted from my article "My Story of Website Usability" published in January 2012 edition of Testing Circus magazine.

13. Share your experience wherein; the developer did not accept security vulnerability and you did great bug advocacy to prove that it is a bug and finally it was fixed. Even if it was not fixed then please let me know about what was the bug and how did you do bug advocacy without revealing the application / company details.

Security Vulnerability

I worked on a multi-component project where each component was owned by a different team. Our team owned component A and another team owned component B. Component A was responsible for storing confidential information at a single location. This data would be requested by multiple consumers and processed accordingly. Since this was a closely watched system with restricted privileges to users, no security measures were taken on this component. The problem arose when different components had to request data from component A. Component B, being the first in line, requested data. Component A gave away the data as the requestor on component B was a trusted guy. However, this data request and response wasn’t secured:

1. Data received at component B had a local copy of confidential data even though it was not supposed to store any of this data.

Team Ownership

The security vulnerability mentioned above was a bigger challenge because multiple teams were involved, with tons of ego floating around, from “This is not my component’s problem, it’s yours” to “Your implementation sucks”. People involved hardly got into the details of the problem and the impact it could have if these components were shipped as is.

Bug Advocacy

As a tester, it was important for me to understand the impact of the above problem even before I could advocate fixing it. I got in touch with a couple of subject matter experts who had worked on similar projects and asked for inputs. I initiated a dialog with a couple of security architecture teams to understand the implications. Around the same time, I gathered feedback about why Team A and Team B had to work together to fix this problem and avoid working in silos. Based on all the information I had, I convened a meeting and discussed these problems with all team owners. Eventually, the bug was accepted as a problem and fixed accordingly.

14. What do you have in your tester’s toolkit? Name at least 10 such tools or utilities. Please do not list like QTP, Load Runner, Silk Test and such things. Something which you have discovered (Example: Process Explorer from SysInternals) on your own or from your colleague. If you can also share how you use it then it would be fantastic.

1. Notepad ++ - for taking notes

2. Burp Suite - for tracking HTTP requests

3. Beyond Compare - comparing files/folders for Build Verification testing

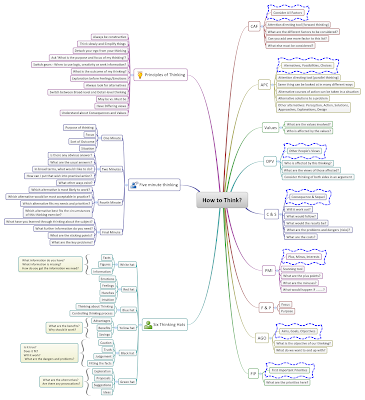

4. XMind - mind mapping tool for project planning, infrastructure planning, test planning, test status reporting and test release documentation

5. Process Explorer - for tracking processes

6. Task Manager - for tracking processes and tasks

7. Batch scripts to execute mundane testing tasks

8. Windows Scheduled tasks to automate windows based tasks. Eg. running a server installation batch script daily at a specified time :-)

9. Microsoft Excel - reporting

10. Browser Add-ons

a. Firebug

b. Web Developer

c. Tamper Data

d. iMacros

e. Resolution Test

f. And others at http://moolya.com/blog/2011/03/04/addon-mindmap-for-testers-from-moolya/

15. Let us say there is a commenting feature for the blog post; there are 100 comments currently. How would you load / render every comment. Is it one by one or all 100 at once? Justify.

Option 1 - Loading comments one after another

Given the attention span of a human being and his thirst for the very next comment, loading one message at a time is a bad idea. It’s bad user experience because the application has to assume a set number of seconds for reading one comment to be able to load the next comment after ‘x’ seconds. This is a delay that might be acceptable for few and not acceptable for others. Many of us are curious enough to read the next comment even before the previous one completes. Given this user behaviour, loading one comment at a time is a bad idea.

Option 2 - Loading all 100 comments at one shot

Loading all 100 comments at one shot means there could be a performance overhead. Assume that each comment contains close to 30 words. Suppose each comment takes about 6 seconds to load on a machine with 512 MB RAM (Well, I have one at home ;-)). Loading 100 comments on the page means 100 x 6 = 600 seconds, which is 10 minutes. 10 minutes is a HUGE time for a comments page to load. The user would run away from this page. Loading all comments simultaneously is a poor idea. Moreover, this solution is not scalable as the number of comments increases over a period of time.

Option 3 - Gradual loading of a designated number of comments

Loading fewer comments while the user reads the previous ones is good design. Suppose 10 messages load at a time. By the time user starts going to the bottom of the page to read 8th message, the next set of 10 messages must get loaded slowly. This way, loading the comments is phased out and user’s attention is not lost too. I believe performance overhead is minimized as there is no stress on the system to load all messages at one shot. This is a scalable solution. Eg. “More” option on Twitter web interface

I would go for Option 3 above. Again this suits me as a user. If the context demands that comments have to load to satisfy specific user requirements, designers could still go for Option 1 or 2 above. Context rules!
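To make Option 3 concrete, here is a small sketch of loading comments in batches of 10. The function name `load_comments` and the plain Python list standing in for the comment store are assumptions for illustration; a real blog would fetch each batch from its database or API when the reader nears the bottom of the page.

```python
def load_comments(comments, offset=0, batch_size=10):
    """Return one batch of comments plus the offset of the next batch.

    The second value is None when there is nothing left to load.
    """
    if offset < 0 or batch_size <= 0:
        raise ValueError("offset must be >= 0 and batch_size must be > 0")
    batch = comments[offset:offset + batch_size]
    next_offset = offset + len(batch)
    if next_offset >= len(comments):
        next_offset = None
    return batch, next_offset


# Usage: keep asking for the next batch, as a "More" link would.
all_comments = ["comment %d" % i for i in range(1, 101)]
offset = 0
batches = []
while offset is not None:
    batch, offset = load_comments(all_comments, offset)
    batches.append(batch)
```

Only the requested batch is handled per call, so the cost of one page view stays roughly constant even as the total number of comments grows.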

16. Have you ever done check automation using open-source tools? How did you identify the checks and what value did you add by automating them? Explain.

I have used Microsoft ACT, AutoIt and the iMacros add-on for check automation.

How did you identify the checks?

Checks are tests that don’t need human thinking to decide the next course of action. A few lines of code can accomplish the same if coded well and remove human intervention.

To quote Ben Simo, “Automated checking can only process whatever decision rules someone thought to program when the checks were created. … Rather than look at testing as something to be either manual or automated, I encourage people to look at individual tasks that are part of testing and try to identify ways that automation can help testers evaluate software.”

What value was added?

Suppose you need to create about 30 test email accounts. You could use the iMacros tool and record the registration process. If a captcha is present, the run can be automated until the captcha is encountered and then resumed after a human enters the captcha value.

I have been part of a team that used Microsoft ACT to write basic performance scripts to test performance tuning products. I wasn’t involved directly with coding, but using these scripts helped identify performance problems in the product we were testing. And of course, it saved a lot of time doing the same set of tests manually.

I have used AutoIt to execute Server Installation and Configuration for one of my projects. An AutoIt script along with batch scripting was used to automate the server installation process, which manually took 1 full day. With this script, the installation was run overnight and the server used to be ready the next morning.

In general, check automation helps automate mundane and routine tasks and use the saved time to test features that need humans to think and decide the next course of action.

17. What kind of information do you gather before starting to test software? (Example: Purpose of this application)

Software information

1. What is the problem that this application is expected to solve. i.e., purpose of the software :-)

2. What is the history of this application?

3. Is there an existing application that was built for the same purpose, but failed to solve the problem. If yes, what were the limitations in that application?

4. What is the technology this application is built using?

5. Is there a competitor application already? If yes, what are they good at and what are they bad at.

6. What are the business objectives set for this application

7. What are the constraints in building this application

8. What are the features that are agreed upon to be built

9. Which features are prioritized over others

User information

1. Who are the users of this application?

2. Are the developers (testers, programmers and concerned support teams) of the application aware of how this application will be used?

3. What is the single most burning problem that they are facing - for which this application is built

4. What are the constraints (permissions and privileges) under which users have to use the application

Documentation

1. Existing documents about the application

2. New requirements documents

3. Online help (if any)

4. Documents relating competitor products

5. Researching on similar applications

People Knowledge

Talking to the following folks can fetch more information about the application:

1. Actual stakeholders of the product (Business teams)

2. Sales professionals

3. Marketing professionals

4. Business/Functional analysts

5. Solution architects

6. Programmers (Give them a warm hug everyday, LOL!)

7. Experts on the respective technology and applications

8. Domain experts

9. Support teams

10. Infrastructure teams

11. Senior management

12. Fellow testers

And of course, tons of meetings :-).

18. How do you achieve data coverage (Inputs coverage) for a specific form with text fields like mobile number, date of birth etc? There are so many character sets and how do you achieve the coverage? You could share your past experience. If not any then you can talk about how it could be done.

Data coverage can never be exhaustive, as it's impossible to cover all inputs as the number of variables in the system increases. For example, if we have to test with different types of mobile numbers across the world, imagine the number of tests that need to be executed.

What needs to be done in such situations is to identify a sample of the data set that can be used as test data. This sample must be an optimal subset that'll cover most of the heavily used test data formats. There are several tools that can be used to generate test data. These tools not only generate a decent sample of test data, but also support multiple character sets, languages, special characters and many other features. Following is a summary of a few of those.
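As a rough illustration of picking an optimal subset by hand, the sketch below builds one representative value per input class for a mobile number field. The class names, the assumed valid length of 10 digits and the "+91" country code are my assumptions for the example, not rules from any particular application.

```python
import random
import string

DEVANAGARI_DIGITS = "०१२३४५६७८९"  # digits from a non-Latin character set


def sample_mobile_inputs(seed=0, length=10):
    """Return one representative test value per input class.

    `length` is the assumed valid number of digits for the field.
    """
    rng = random.Random(seed)  # seeded so the sample is repeatable

    def pick(alphabet, n):
        return "".join(rng.choice(alphabet) for _ in range(n))

    return {
        "valid": pick(string.digits, length),
        "too_short": pick(string.digits, length - 1),
        "too_long": pick(string.digits, length + 1),
        "empty": "",
        "with_country_code": "+91" + pick(string.digits, length),
        "with_separators": "-".join(
            [pick(string.digits, 3), pick(string.digits, 3),
             pick(string.digits, length - 6)]
        ),
        "letters": pick(string.ascii_letters, length),
        "special_characters": pick(string.punctuation, length),
        "non_latin_digits": pick(DEVANAGARI_DIGITS, length),
        "surrounding_spaces": " " + pick(string.digits, length) + " ",
    }
```

Ten values will not prove the field correct, but each one stands in for a whole family of inputs, which is the point of sampling instead of chasing every possible number.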

GenerateData.com

Test Data generation

1. Names

2. Phone numbers

3. Email addresses

4. Cities

5. States

6. Provinces

7. Countries

8. Dates

9. Street addresses

10. Postal zip code

11. Number ranges

12. Alphanumeric strings

13. Country specific data (state / province / county) for US, Canada, UK etc

14. Auto-increment

15. Fixed number of words

16. Random number of words

Test File generation

1. XML

2. Excel

3. HTML

4. CSV

5. SQL

Hexawise

1. Pairwise testing using multiple variables

2. Valid pairs

3. Invalid pairs

And a lot more at http://hexawise.com/.

Allpairs

AllPairs helps with pairwise testing. For example, if your product needs to be tested on 3 different browsers with 2 versions each, AllPairs is a tool that can be used. More at http://satisfice.com/tools.shtml.
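To show what pairwise selection means, here is a small greedy sketch in Python. It is not the algorithm used by AllPairs or Hexawise, just a simple illustration of the idea: keep picking the combination that covers the most value pairs not yet covered. The browser names, version labels and the third "os" parameter are hypothetical, added so that pairwise selection has something to reduce.

```python
from itertools import combinations, product


def pairwise_cases(parameters):
    """Greedily choose test cases until every pair of values is covered.

    `parameters` maps a parameter name to its list of possible values.
    """
    names = list(parameters)

    def pairs_of(case):
        # Every pair of (parameter, value) settings exercised by one case.
        return {((a, case[a]), (b, case[b])) for a, b in combinations(names, 2)}

    candidates = [dict(zip(names, values))
                  for values in product(*parameters.values())]
    uncovered = set()
    for case in candidates:
        uncovered |= pairs_of(case)

    chosen = []
    while uncovered:
        # Pick the candidate that covers the most still-uncovered pairs.
        best = max(candidates, key=lambda c: len(pairs_of(c) & uncovered))
        chosen.append(best)
        uncovered -= pairs_of(best)
    return chosen


# Hypothetical parameters: 3 browsers, 2 versions each, 2 operating systems.
params = {
    "browser": ["Firefox", "Chrome", "IE"],
    "version": ["old", "new"],
    "os": ["Windows", "Linux"],
}
cases = pairwise_cases(params)
```

Testing every combination of these parameters would need 3 x 2 x 2 = 12 cases; the greedy selection needs fewer while still exercising every pair of values at least once. Dedicated tools handle constraints and larger models far better than this sketch.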

Testomate

To be released. I have been a beta tester on this and it’s pretty impressive :-)

Feedback welcome, as always,

Regards,

Pari